Dynamic Programming in the Real World

An algorithm for Content-Aware Image Resizing plus an introduction to Divide and Conquer Algorithms.

Hey Everyone,

Today we’ll be talking about

An algorithm for Content-Aware Image Resizing

Resizing an image naively can distort the objects in the image (due to the changing proportions).

Content-Aware Image Resizing algorithms try to change the proportions of an image without distorting any of the items in the image.

We talk about one of the most popular Content-Aware Image Resizing algorithms and how it’s implemented with Dynamic Programming

An introduction to Divide and Conquer Algorithms

We’ll give an intro to Divide and Conquer algorithms and go through a couple of examples.

We’ll show you how to calculate the time complexity of a Divide and Conquer algorithm using the Master Method.

Plus, a couple awesome tech snippets on

How Slack designs APIs

How HTTPS works

Understanding functional programming languages

Visualizing a Codebase

Lessons learned from 15 years of open source development.

Interviewing.io is an awesome resource.

Book realistic mock interviews with senior FAANG engineers who will give you detailed and actionable feedback on exactly what you need to work on.

They prepare you for the pressure and stress that comes from an actual interview setting.

You don’t pay anything until you’re hired.

Check them out here.

Sponsored

Content-Aware Image Resizing

When you resize an image, a common issue is that you distort the objects in the image.

If you naively reduce the width of the image on the right by 50%, you end up with the image on the left, which distorts all the objects in the original image.

Content-aware image resizing attempts to preserve the proportions of the objects while changing the image proportions.

Here’s an example where we reduce the image’s width by 50% again, but this time with a content-aware resizing approach.

The left image looks far more natural since the proportions of the balloons were preserved.

The Seam Carving Algorithm

The Seam Carving algorithm is the algorithm behind content-aware image resizing.

The algorithm finds the seam (continuous sequence of pixels) with the smallest contribution to the image content and then removes it (carves it out).

This process repeats over and over again until we get the required image width or height.

You can see the Seam Carving run on the image above.

In the image, the hot air balloon pixels contribute more to the content than the sky pixels. Therefore, the sky pixels are being removed first.

Finding the seam with the smallest contribution to image content is done with dynamic programming.

The image contribution of each pixel is calculated based on it’s color (RGBA value) difference between it and two neighboring pixels.

The smaller the color difference, the less important the pixel is.

The importance of a pixel is referred to as the pixel energy. The more important a pixel is, the more energy it has.

Here’s the formula for calculating a pixel’s energy (importance).

Finding the Minimum Seam (Dynamic Programming)

The Naive Approach

Now, we have to find the path of pixels (the seam) that goes from top to bottom and has the smallest sum of pixel energies.

The naive approach would be to check all possible paths one after another.

This has a time complexity of O(w * 3^h) where w and h are the width and height of the image.

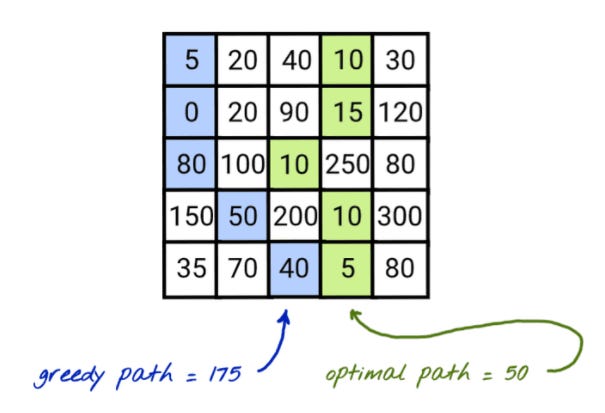

The Greedy Approach

You can also try a greedy approach, where you always pick the pixel with the lowest amount of energy as the next pixel and hope that the resulting seam energy will be the smallest one.

With this approach, you’re not guaranteed to find the seam with the lowest energy.

However, it is fast with a linear time complexity of O(w + h).

The Dynamic Programming Approach

With the naive approach, there’s a ton of repeated computation resulting in an exponential time complexity.

This should be a hint that dynamic programming could be useful here (caching the results of the computations).

We can save the energy of the minimum seam at the particular pixel in an additional seamEnergies table.

We go through the image row by row and for each pixel we calculate the lowest energy seam that ends at that pixel.

There are a maximum of 3 choices: the seam ending at the top left pixel, the seam ending at the top middle pixel, or the seam ending at the top right pixel. We pick the minimum of those 3 and add the current pixel’s energy.

After we’ve found all the seam energies, the minimum seam can be found by taking the minimum of the bottom row of our seam energies table.

After finding the lowest energy seam, we can remove that seam by shifting the pixels to the right of the seam by 1px to the left.

This is a summary of the full blog post by Oleksii Trekhleb.

Check out his blog post for a much more detailed explanation and a full JavaScript implementation.

Tech Snippets

How APIs are Designed at Slack - A great blog post by Slack Engineering going through their design philosophy and process for APIs.

Lessons learned from 15 years of SumatraPDF, an open source windows app

Krzysztof Kowalczyk is the creator of SumatraPDF, an open source e-reader for Windows (lets you read PDF, XPS, EPUB, MOBI, CHZ and other file formats).

He’s been working on SumatraPDF for 15 years now, and he wrote a great blog post on lessons he learned about the code, product and business model of open source.

In the past, Krzysztof worked at Microsoft, Palm, BitTorrent and a few small Silicon Valley startups.

How HTTPS works - An awesome series of comics that explain how HTTPS works (asymmetric key encryption, TLS handshake, SSL, Certificate Authorities, etc.)

Visualizing a codebase - An awesome blog post by Amelia Wattenberger (she’s the author of FullStack D3 and Data Visualization) on how we can build tools to visualize a codebase.

Being able to quickly visualize all the files in a codebase and their relationship to each other could be extremely useful in quickly getting ramped up to a new codebase.

Implementing Functional Languages - This is a free book that will give you a solid understanding of functional programming languages and building compilers for them.

Most of the book is a series of implementations of a small functional language called the Core language.

Divide and Conquer Algorithms

What is Divide and Conquer?

The divide and conquer paradigm breaks your problem down into more sub-problems of the same type, until these become simple enough to be solved directly (the base case). This is the divide step.

Then, it combines the solutions to the subproblems in some form to get the solution to the original problem. This is the combine step.

You can also perform optimizations in the divide or combine steps, resulting in paradigms like Dynamic Programming (storing the answers to subproblems in cache).

Mergesort is a classic sorting algorithm that uses Divide and Conquer to sort a list of numbers.

The red boxes are part of the divide steps. The green boxes are part of the combine steps.

The essence of the implementation of MergeSort is

Now, one issue that comes up is how can you analyze the time complexity of this algorithm?

With divide and conquer algorithms, we’re typically calling the function recursively.

In our MergeSort example, we’re calling the mergeSort function recursively on firstHalf and secondHalf (lines 9 and 10).

We’ll need the time complexity of those two lines to figure out the time complexity of the entire function. This creates a catch-22 of sorts.

Time Complexity of Divide and Conquer Algorithms

The way we can resolve this is through the Master Method.

The Master Method requires us to express our algorithm as a recurrence. A recurrence is an equation that expresses the output as a function of the equation itself.

So, an example is the factorial operation.

n! = 1 * 2 * 3 * ... * ( n - 1) * n

You can express a factorial as a recurrence. The recurrence would be

F(n) = n * F(n - 1)

with 0! = 1 as the base case.

Going back to our MergeSort example…

Let’s express the time complexity as a recurrence.

The base case (lines 3 - 4) will take constant time, O(1).

Both lines of the divide step (lines 6 - 7) will take linear time. That’s because we’re copying over the first half of nums to firstHalf and copying the second half of nums to secondHalf. Therefore both of those steps are O(n).

Now, we have to solve our subproblems (lines 9 - 10). The inputs to both of these subproblems will be half the list, so it can be expressed as M(n / 2) where M(n) is the time complexity of MergeSort. Since we’re calling mergeSorttwice, the entire solving subproblem step will take 2 * M(n / 2).

After that, we have the combine step (line 12). This calls a merge function. The function just iterates through firstHalfSorted and secondHalfSorted and combines the two lists into a final, sorted list. It takes linear time, so another O(n) operation.

The return statement at the end takes constant time.

We can put this together to come up with our recurrence.

M(n) = 2 * M( n / 2) + O(n)

Now, how do we turn this recurrence into a time complexity in Big O notation?

We can use The Master Method.

The Master Method is a simple formula that you can plug your recurrence into, and it’ll output a time complexity.

However, your recurrence must be in standard form in order to use the Master Method.

A recurrence in standard form is a recurrence that looks like

T(n) = a * T(n / b) + O(n^d)

Where a, b and d can represent any constant.

The vast majority of the recurrences you’ll come across in coding interviews can be expressed in standard form.

The Master Method formula is….

So, going back to our MergeSort recurrence of

M(n) = 2 * M( n / 2) + O(n)

a = 2, b = 2 and d = 1.

Therefore, 2 = 2^1, which means we’re in Case 1.

Therefore, our time complexity is O(n log n). That’s it!

You should definitely memorize all 3 cases of The Master Method for your coding interviews.

If you’d like some intuition for why The Master Method works, check out this video.

Here’s the code for Binary Search.

Reply back to this email with the recurrence and the time complexity of this function so you can test your understanding!

Unfortunately, we ran out of space for today’s email (many email clients clip emails that are longer than 102kb), so no interview problem for today.

We’ll have the next question and our previous solution in our next email!