Google File System Explained

Hey Everyone,

Today we’ll be talking about

Google File System (GFS) - Google’s distributed storage system that was the core of the company until 2010.

Why GFS was created and its goals

The design of GFS

How GFS handles data mutations

Plus, a couple awesome tech snippets on

A great article to learn about Bit Manipulation

Analyzing good code comments by looking at the Redis source code.

Exercises to “git gud” at Git

Windows 11’s changes to Windows Subsystem for Linux

We have a solution to our last coding interview question on linked lists, plus a new question from Microsoft.

Quastor Daily is a free Software Engineering newsletter sends out FAANG Interview questions (with detailed solutions), Technical Deep Dives and summaries of Engineering Blog Posts.

Google File System

In 1998, the first Google index had 26 million pages. In 2000, the Google index reached a billion web pages. By 2008, Google was processing more than 1 trillion web pages.

As you might imagine, the storage needs required for this kind of processing were massive and rapidly growing.

To solve this, Google built Google File System (GFS), a scalable distributed file system written in C++. Even in 2003, the largest GFS cluster provided hundreds of terabytes of storage across thousands of machines and it was serving hundreds of clients concurrently.

GFS is a proprietary distributed file system, so you’ll only encounter it if you work at Google. However, Doug Cutting and Mike Cafarella implemented Hadoop Distributed File System (HDFS) based on Google File System and HDFS is used widely across the industry.

LinkedIn recently published a blog post on how they store 1 exabyte of data across their HDFS clusters. An exabyte is 1 billion gigabytes.

In this post, we’ll be talking about the goals of GFS and its design. If you’d like more detail, you can read the full GFS paper here.

Goals of GFS

The main goal for GFS was that it be big and fast. Google wanted to store extremely large amounts of data and also wanted clients to be able to quickly access that data.

In order to accomplish this, Google wanted to use a distributed system built of inexpensive, commodity machines.

Using commodity machines is great because then you can quickly add more machines to your distributed system (as your storage needs grow). If Google relied on specialized hardware, then there may be limits on how quickly they can acquire new machines.

To achieve the scale Google wanted, GFS would have to use thousands of machines. When you’re using that many servers, you’re going to have constant failures. Disk failures, network partitions, server crashes, etc. are an everyday occurrence.

Therefore, GFS needed to have systems in place for automatic failure recovery. An engineer shouldn’t have to get involved every time there’s a failure. The system should be able to handle common failures on its own.

The individual files that Google wanted to store in GFS are quite big. Individual files are typically multiple gigabytes and so this affected the block sizes and I/O operation assumptions that Google made for GFS.

GFS is designed for big, sequential reads and writes of data. Most files are mutated by appending new data rather than overwriting existing data and random writes within a file are rare. Because of that access pattern, appending new data was the focus of performance optimization.

Design of GFS

A GFS cluster consists of a single master node and multiple chunkserver nodes.

The master node maintains the file system’s metadata and coordinates the system. The chunkserver nodes are where all the data is stored and accessed by clients.

Files are divided into 64 megabyte chunks and assigned a 64 bit chunk handle by the master node for identification. The chunks are then stored on the chunkservers with each chunk being replicated across several chunkservers for reliability and speed (the default is 3 replicas).

The master node keeps track of the file namespace, the mappings from files to chunks and the locations of all the chunks. It also handles garbage collection of orphaned chunks and chunk migration between the chunkservers. The master periodically communicates with all the chunkservers through HeartBeat messages to collect its state and give it instructions.

An interesting design choice is the decision to use a single master node. Having a single master greatly simplified the design since the master could make chunk placement and replication decisions without coordinating with other master nodes.

However, Google engineers had to make sure that the single master node doesn’t become a bottleneck in the system.

Therefore, clients never read or write file data through the master node. Instead, the client asks the master which chunkservers it should contact. Then, the client caches this information for a limited time so it doesn’t have to keep contacting the master node.

GFS Mutations

A mutation is an operation that changes the contents or the metadata of a chunk (so a write or an append operation).

In order to guarantee consistency amongst the replicas after a mutation, GFS performs mutations in a certain order.

As stated before, each chunk will have multiple replicas. The master will designate one of these replicas as the primary replica.

Here are the steps for performing a mutation to a chunk:

The client asks the master which chunkserver is the primary chunk and for the locations of the other chunkservers that have that chunk.

The master replies with the identity of the primary chunkserver and the other replicas. The client caches this information.

The client pushes data directly to all the chunkserver replicas. Each chunkserver will store the data in an internal LRU buffer cache.

Once all the replicas have acknowledged receiving the data, the client sends a write request to the primary chunkserver. The primary chunkserver then applies the mutations to its state.

The primary chunkserver forwards the write requests to the other chunkservers. Each chunkserver then applies the mutation to their state.

The secondary chunkservers all reply to the primary chunkserver indicating that they’ve completed the operation.

The primary chunkserver replies to the client informing the client that the write was successful (or if there were errors).

GFS Interface

GFS organizes files hierarchically in directories and identifies them by pathnames, like a standard file system. The master node keeps track of the mappings between files and chunks.

GFS provides the usual operations to create, delete, open, close, read and write files.

It also has snapshot and record append operations.

Snapshot lets you create a copy of a file or directory tree at low cost.

Record append allows multiple clients to append data to a file concurrently and it guarantees the atomicity of each individual client’s append.

To learn more about Google File System, read the full paper here.

If you’d like to read about the differences between GFS and HDFS, you can check that out here.

Tech Snippets

Low Level Bit Hacks - A great article that goes through a bunch of low level bit hacks. This is a good read if you want to learn more about bit manipulation.

Hacks include

Check if an integer is even or odd

Test if if the nth bit is set

Set the nth bit

Unset the nth bit

Flip the nth bit

And more!

Writing System Software: Code Comments - In one of our last posts, we talked about Clean Code’s view on comments and how you should always try to express yourself in your code rather than with comments.

That doesn’t mean no comments, since there are concepts that can’t be expressed in code that require a comment.

This article goes through the Redis codebase and looks at the comments and categorizes them. The author then talks about which of those comment types are useful, and which are questionable.

Git Challenges - A cool platform with exercises to practice Git.

One interesting challenge is where you have a bunch of changes and you want to commit these changes in two separate commits instead of one. These changes are all in 1 file, so you can’t just git add two different files separately.

How do you do it?

Windows 11 improves WSL - If you’re a software engineer with a windows machine, you’re probably a big fan of the Windows Subsystem for Linux feature that Windows 10 added in 2016.

Windows 11 will be doubling down on this feature, making it much easier to install.

Windows 11 will also be the first production Windows build with graphics and sound support for WSL apps. (If you’re on Windows 10 and want to try it out, you can install it using these instructions)

This means you can install GUI apps from the linux command line and have them work properly.

You can read more about the architecture of this here.

Quastor Daily is a free Software Engineering newsletter sends out FAANG Interview questions (with detailed solutions), Technical Deep Dives and summaries of Engineering Blog Posts.

Interview Question

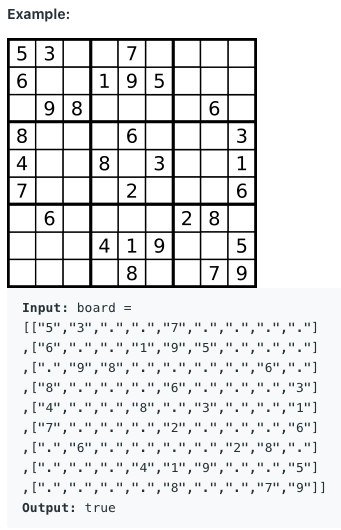

You are given a 2-D array that represents a 9x9 Sudoku board. Some of the cells in board will be filled, while others are empty.

Return true if the board is valid.

In order to be valid, it must satisfy the following rules…

Each row cannot repeat any of the digits from 1 to 9.

Each column cannot repeat any of the digits from 1 to 9.

Each 3x3 sub-box of the grid cannot repeat any of the digits from 1 to 9.

A partially filled Sudoku board can be valid as long as it doesn’t violate any of those 3 rules.

We’ll send the solution in our next email, so make sure you move our emails to primary, so you don’t miss them!

Gmail users—move us to your primary inbox

On your phone? Hit the 3 dots at the top right corner, click "Move to" then "Primary"

On desktop? Back out of this email then drag and drop this email into the "Primary" tab near the top left of your screen

A pop-up will ask you “Do you want to do this for future messages from quastor@substack.com” - please select yes

Apple mail users—tap on our email address at the top of this email (next to "From:" on mobile) and click “Add to VIPs”

Previous Solution

As a reminder, here’s our last question

You are given the head of a linked list.

You’re also given an integer n.

Remove the nth node from the end of the linked list and return its head.

Example

Input: head = [1, 2, 3, 4, 5], n = 2

Output: [1, 2, 3, 5]

Here’s the question in LeetCode

Solution

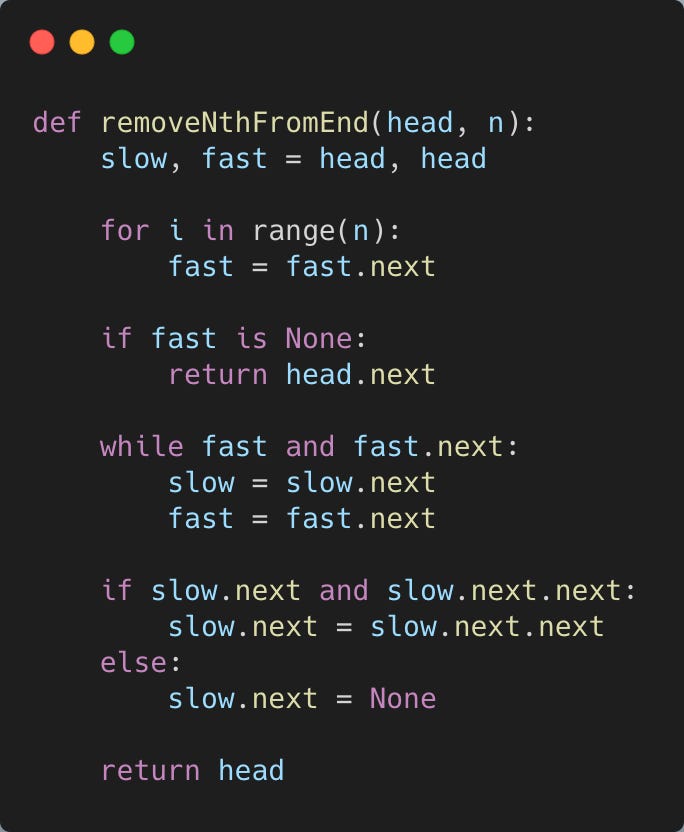

We can solve this question with a single pass of the linked list.

We do it by having two pointers, a fast pointer and a slow pointer.

The fast pointer will first traverse N nodes in our linked list.

If the fast pointer has already reached the end of the linked list, then that means the Nth node from the end of the linked list is the head node. So we’ll delete the head node and return the linked list.

Otherwise, we’ll start traversing both the slow and fast pointer through our linked list. The fast pointer will be N nodes ahead of the slow pointer.

When the fast pointer reaches the last node of our linked list, the slow pointer will be directly behind the Nth node from the end of the linked list.

We can delete the node in front of the slow pointer and then return our linked list.

Here’s the Python 3 code. What’s the time and space complexity of our solution? Reply back with your answer and we’ll tell you if you’re right/wrong.

Quastor Daily is a free Software Engineering newsletter sends out FAANG Interview questions (with detailed solutions), Technical Deep Dives and summaries of Engineering Blog Posts.