How Quora shards their MySQL database

Hey Everyone!

Today we’ll be talking about

How Quora shards their MySQL database

Scaling issues Quora had with MySQL

Splitting up their database by tables

Splitting up tables into shards

Plus, some tech snippets on

Platform Engineering 101

Building a JavaScript Testing Framework

How Postgres Chooses Which Index to use for a Query

We also have a solution to our last Facebook interview question and a new question from Apple.

Quastor is a free Software Engineering newsletter that sends out summaries of technical blog posts, deep dives on interesting tech and FAANG interview questions and solutions.

Sharding Databases at Quora

Quora is a social platform where users can post and answer questions on anything. The website receives more than 600 million visits per month.

Quora relies on MySQL to store critical data like questions, answers, upvotes, comments, etc. The size of the data is on the order of tens of terabytes (without counting replicas) and the database gets hundreds of thousands of queries per second.

Vamsi Ponnekanti is a software engineer at Quora, and he wrote a great blog post about why Quora decided to shard their MySQL database.

MySQL at Quora

Over the years, Quora’s MySQL usage has grown in the number of tables, size of each table, read queries per second, write queries per second, etc.

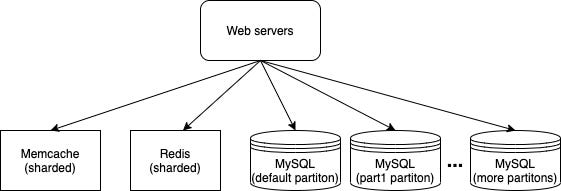

In order to handle the increase in read QPS (queries per second), Quora implemented caching using Memcache and Redis.

However, the growth of write QPS and growth of the size of the data made it necessary to shard their MySQL database.

At first, Quora engineers split the database up by tables and moved tables to different machines in their database cluster.

Afterwards, individual tables grew too large and they had to split up each logical table into multiple physical tables and put the physical tables on different machines.

We’ll talk about how they implemented both strategies.

Splitting by Table

As the read/write query load grew, engineers had to scale the database horizontally (add more machines).

They did this by splitting up the database tables into different partitions. If a certain table was getting very large or had lots of traffic, they create a new partition for that table. Each partition consists of a master node and replica nodes.

The mapping from a partition to the list of tables in that partition is stored in ZooKeeper.

The process for creating a new partition is

Use mysqldump (a tool to generate a backup of a MySQL database) to dump the table in a single transaction along with the current binary log position (the binary log or binlog is a set of log files that contains all the data modifications made to the database)

Restore the dump on the new partition

Replay binary logs from the position noted to the present. This will transfer over any writes that happened after the initial dump during the restore process (step 2).

When the replay is almost caught up, the database will cutover to the new partition and direct queries to it. Also, the location of the table will be set to the new partition in ZooKeeper.

A pro of this approach is that it’s very easy to undo if anything goes wrong. Engineers can just switch the table location in ZooKeeper back to the original partition.

Some shortcomings of this approach are

Replication lag - For large tables, there can be some lag where the replica nodes aren’t fully updated.

No joins - If two tables need to be joined then they need to live in the same partition. Therefore, joins were strongly discouraged in the Quora codebase so that engineers could have more freedom in choosing which tables to move to a new partition.

Splitting Individual Tables

Splitting large/high-traffic tables onto new partitions worked well, but there were still issues around tables that became very large (even if they were on their own partition).

Schema changes became very difficult with large tables as they needed a huge amount of space and took several hours (they would also have to frequently be aborted due to load spikes).

There were unknown risks involved as few companies have individual tables as large as what Quora was operating with.

MySQL would sometimes choose the wrong index when reading or writing. Choosing the wrong index on a 1 terabyte table is much more expensive than choosing the wrong index on a 100 gigabyte table.

Therefore, engineers at Quora looked into sharding strategies, where large tables could be split up into smaller tables and then put on new partitions.

Key Decisions around Sharding

When implementing sharding, engineers at Quora had to make quite a few decisions. We’ll go through a couple of the interesting ones here. Read the full article for more.

Build vs. Buy

Quora decided to build an in-house solution rather than use a third-party MySQL sharding solution (Vitess for example).

They only had to shard 10 tables, so they felt implementing their own solution would be faster than having to develop expertise in the third party solution.

Also, they could reuse a lot of their infrastructure from splitting by table.

Range-based sharding vs. Hash-based sharding

There are different partitioning criteria you can use for splitting up the rows in your database table.

You can do range-based sharding, where you split up the table rows based on whether the partition key is in a certain range. For example, if your partition key is a 5 digit zip code, then all the rows with a partition key between 7000 and 79999 can go into one shard and so on.

You can also do hash-based sharding, where you apply a hash function to an attribute of the row. Then, you use the hash function’s output to determine which shard the row goes to.

Quora makes frequent use of range queries so they decided to use range-based sharding. Hash-based sharding performs poorly for range queries.

How Quora Shards Tables

So, when Quora has a table that is extremely large, they’ll split it up into smaller tables and create new partitions that hold each of the smaller tables.

Here are the steps they follow for doing this

Data copy phase - Read from the original table and copy to all the shards. Quora engineers set up N threads for the N shards and each thread copies data to one shard. Also, they take note of the current binary log position.

Binary log replay phase - Once the initial data copy is done, they replay the binary log from the position noted in step 1. This copies over all the writes that happened during the data copy phase that were missed.

Dark read testing phase - They send shadow read traffic to the sharded table in order to compare the results with the original table.

Dark write testing phase - They start doing dark writes on the sharded table for testing. Database writes will go to both the unsharded table and the sharded table and engineers will compare.

If Quora engineers are satisfied with the results from the dark traffic testing, they’ll restart the process from step 1 with a fresh copy of the data. They do this because the data may have diverged between the sharded and unsharded tables during the dark write testing.

They will repeat all the steps from the process until step 3, the dark read testing phase. They’ll do a short dark read testing as a sanity check.

Then, they’ll proceed to the cutover phase where they update ZooKeeper to indicate that the sharded table is the source of truth. The sharded table will now serve read/write traffic.

However, Quora engineers will still propagate all changes back to the original, unsharded table. This is done just in case they need to switch back to the old table.

This article was published in 2020 and Quora had successfully sharded 3 large production tables before the article was written.

For more details, you can read the full article here.

Quastor is a free Software Engineering newsletter that sends out summaries of technical blog posts, deep dives on interesting tech and FAANG interview questions and solutions.

Tech Snippets

Building a JavaScript Testing Framework - Christoph Nakazawa is writing a great series on JavaScript infrastructure and this post goes over how JavaScript testing frameworks work. He builds a toy JavaScript testing framework with 100 lines of code that is similar to Jest.

Platform Engineering 101 - This is a great interview with Zoe Sobin, a Senior Engineering Manager at HubSpot. Zoe talks about platform engineering at HubSpot and how to manage platform teams at your company.

How Postgres Chooses Which Index to Use For a Query - When you send an SQL query to a Postgres database, there are usually multiple ways for Postgres to execute that query. This is a great article that dives into how Postgres decides which query plan to use.

Interview Question

Implement a BSTIterator class that represents an iterator over the in-order traversal of a Binary Search Tree.

You should implement three functions

BSTIterator - the constructor of the class

hasNext - returns True if there is a number in the traversal to the right of the pointer.

next - moves the pointer to the right and then returns the number at the pointer.

Here’s the question in LeetCode.

Previous Question

As a refresher, here’s the previous question

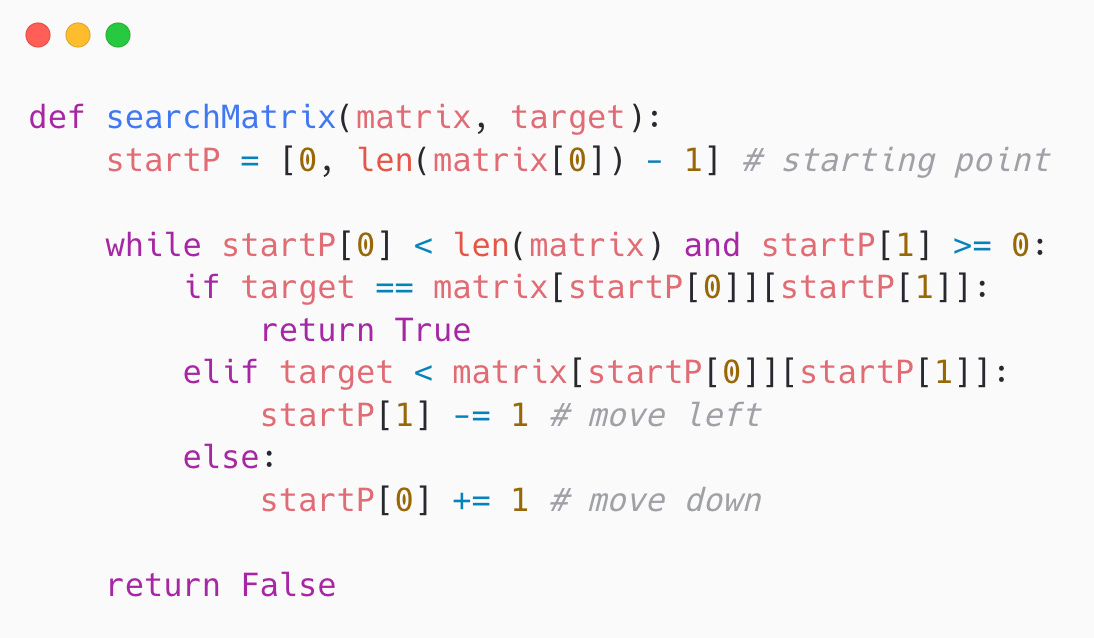

Write an algorithm that searches for a target value in an m x n integer matrix.

The matrix will have the following properties

integers in each row are sorted in ascending from left to right

integers in each column are sorted in ascending from top to bottom

Here’s the question on LeetCode.

Solution

We can solve this question by starting at the top-right of our array.

All the integers to the left of our starting point are less than the starting point.

All the integers below our starting point are greater than the starting point.

We compare our target value with the starting point.

if they’re equal, then we return True

if the target is less than our starting point, we move left

if the target is greater than our starting point, we go down

If we’ve exceeded the bounds of our matrix, then we return False.

The time complexity is O(m + n) because every iteration we eliminate one row or one column when we move down or left.

Quastor is a free Software Engineering newsletter that sends out summaries of technical blog posts, deep dives on interesting tech and FAANG interview questions and solutions.